Trusted Autonomy and Human-Machine Systems

Advances in artificial intelligence, automation and autonomous systems are driving strategic investments in defence science and technology that draw on Australia’s national innovation framework of publicly funded research agencies, academia and industry. In this context, the 2016 Defence Industry Policy Statement identified operating domains in which scientific and technological progress is substantially transforming Defence operations, as well as technology areas with high game-changing potential. The Defence operating domains being transformed by science and technology innovations include:

- Cyber Electronic Warfare

- Integrated ISR

- Space Capabilities

- Undersea Warfare

The Defence Industry Policy Statement also identifies the following potentially game-changing technology areas:

- Trusted Autonomous Systems

- Enhanced Human Performance and Resilience

- Hypersonics technology

- Quantum Technologies

- Multidisciplinary Material Sciences

Trusted Autonomous Systems (TAS) is therefore a top priority, considered worthy of investment to give the Australian Defence Force (ADF) a distinctive edge in the current and future battlespace. Australian defence has successfully leveraged the country’s standing as one of the world leaders in field robotics, which involves developing automated systems to perform specific tasks that are hazardous or difficult for humans. However, the ADF needs novel autonomous systems that can be trusted in adversarial, congested, contested and complex environments, where errors or failures can jeopardise the successful completion of a defence mission. TAS is therefore one of the key areas requiring substantial research and development effort to modernise and improve ADF operations. Such efforts will increase capability across the battlespace while reducing risk to personnel, and are expected to lower acquisition and future operating costs. In a network-centric environment, including coordinated manned-unmanned operations, TAS can increase the coherency of effort and the operational pace of the ADF. Furthermore, TAS will become a key aspect of future third offset operations with Australia’s allies.

Considering these aspects, a Defence-led Cooperative Research Centre in Trusted Autonomous Systems (DCRC-TAS) was established to provide clear pathways for implementation and uptake of such technologies into deployable defence programs and capabilities. The CRC is led by the Defence Science and Technology (DST) Group and comprises a fundamental research program and a functional technology program, which together lead to a set of agile capability demonstrators showcasing TAS. It is planned that the fundamental research program will focus on areas such as machine cognition, human-autonomy integration and persistent autonomy. The functional technology program will focus on management of uncertainty and unpredictability, persistent perception, multi-modal fusion, self-healing systems and aggressive actuation technologies. By capitalising on the fundamental research and functional technology programs, the capability demonstration program is planned to showcase integrated capabilities at a level that is realistic against ADF requirements. A critical focus of the capability demonstrator will be on demonstrating trusted and resilient autonomy.

To bring different stakeholders together and establish the planned DCRC-TAS, the DST Group organised information sessions on the formation of the new CRC in Melbourne, Sydney, Adelaide and Brisbane. Owing to our track record in UAS research (and our participation in previous symposia and research networking initiatives organised by DSTO and DST Group), we were formally invited by DST Group to these roadshow events, and we participated in the information sessions focusing on the DCRC-TAS research requirements and overall program structure. In addition to these information sessions, we organised dedicated meetings in our research laboratories in the Sir Lawrence Wackett Aerospace Research Centre. These meetings were attended by some of the key DST Group personnel involved in the DCRC-TAS program and by RMIT academics who are research active in the area of TAS. At that time, DST Group sought responses to a Request for Information (RFI) on research and development into Trusted Autonomous Systems (TAS) for the DCRC-TAS. Our contributions to the DCRC-TAS RFI included submissions in the following areas:

- Cognitive and Adaptive Human-Machine Interfaces and Interactions

- Intelligent Decision Support Systems for Real-Time Adaptive Tactical Planning

- A Unified Approach to State Estimation and Decision Making in Trusted Autonomous Systems

- CNS Integrity Monitoring and Augmentation

- Autonomous Navigation and Tracking in GNSS-Denied and Jamming Environments

- Bio-inspired Navigation and Tracking for Trusted Autonomous Platforms

- Multi-Sensor Data Fusion for Autonomous Navigation and Tracking

- Turing Test-based Verification of Trusted Autonomous Platforms

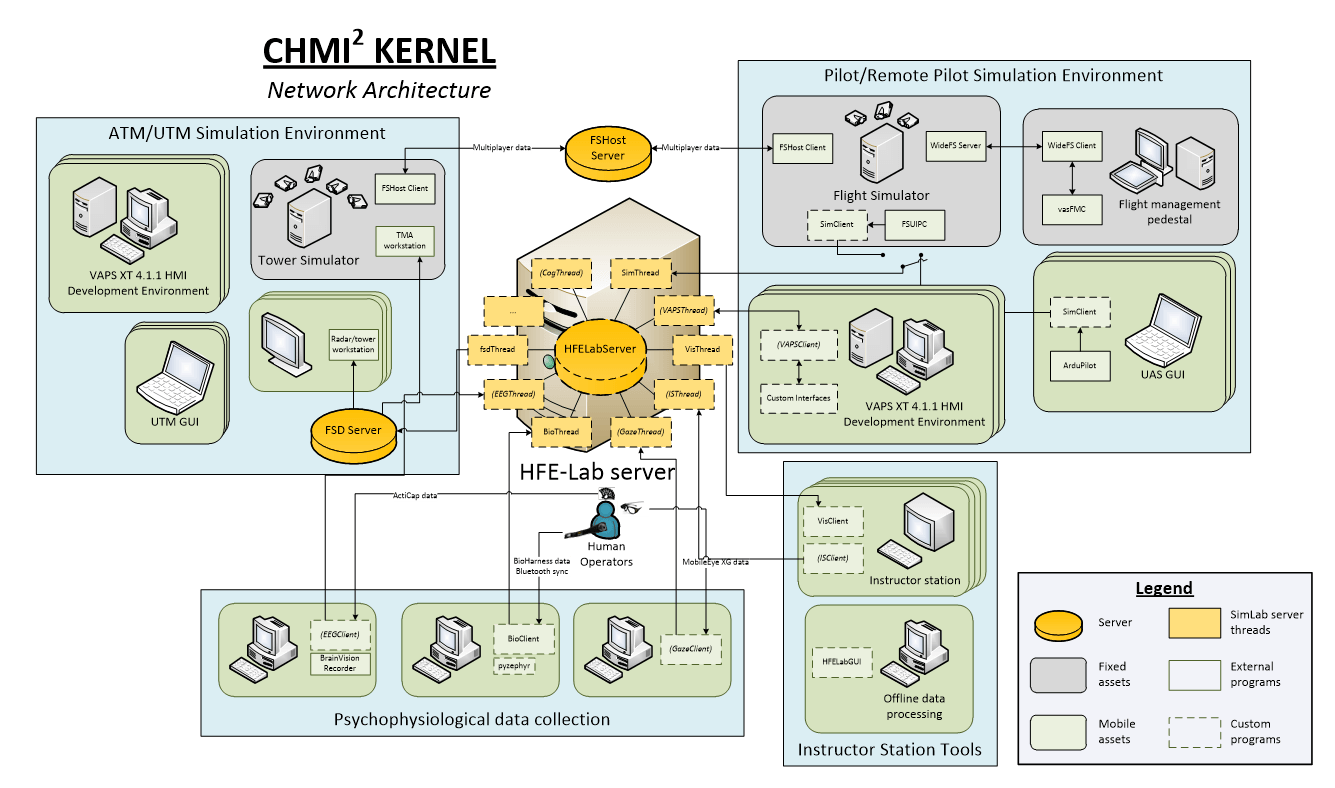

Future human-machine systems will progressively become more interconnected, with higher autonomy and more data-sharing across manned and unmanned platforms. With the increasing reliance on intelligent systems, the interaction between users and such systems is shifting from control to collaboration. This research program addresses the challenge of developing Cognitive Human-Machine Interfaces and Interactions (CHMI2) for future Aerospace, Defence and Transport (ADT) applications to optimise both operator and machine decision making in complex operational environments. This involves the application of human factors principles in the design of advanced decision support systems and associated display, command and control functions. In this context, four research areas have been identified and are briefly outlined below.

Area 1: Optimal trust and collaboration

In current ADT applications, information is exchanged in two layers: the physical layer is where human perception and intervention occur, while the cybernetic layer is where digital data is exchanged and processed by machines. In future transport systems, these two layers will be even more tightly interwoven, requiring advances in domains including information management, data fusion and decision making, especially at the cyber-physical interface. Cognitive systems must be engineered to assess the trustworthiness of the information provided to or by the operator, and must incorporate models that predict operator trust, mistrust and distrust.

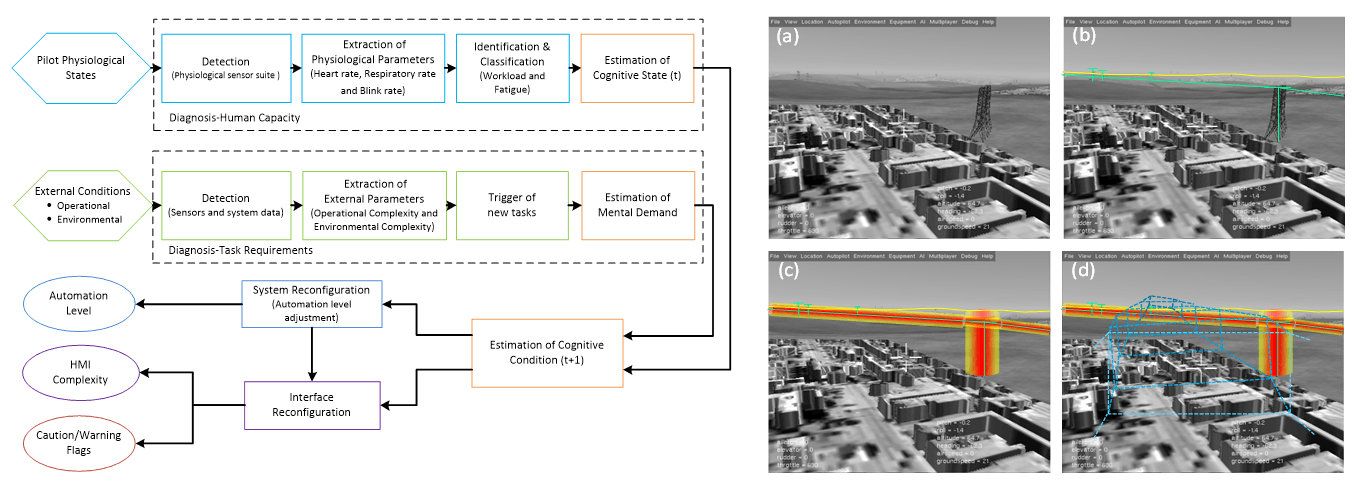

Area 2: Cognitive systems and human cognition modelling

To achieve optimal trust and collaboration between agents, cognitive systems first have to be engineered for cognition, that is, the ability to develop higher levels of abstraction by combining observations and knowledge and to apply these abstractions to predict and adapt to unfamiliar situations, utilizing technologies in machine learning and artificial intelligence. Machine cognition can also be used to infer the operator’s cognitive states from the operator’s inputs at the cyber-physical interface as well as from psychophysiological sensor measurements of, for example, brain, eye or heart activity. A crucial aspect of the operator’s cognition that needs to be considered is the perception of time. Different tasks have different timescales, each requiring specific predictive and reactive indicators.

Area 3: Augmentation of human-machine teams

Cognitive systems augment human-machine performance by synthesizing information outside the cognitive boundaries of operators. Such information synthesis might comprise digital communications from other cognitive systems in the cybernetic domain, predictions based on large volumes of digital data, and/or the recognition of complex patterns in multiple dimensions. Thanks to these capabilities, cognitive systems can provide intelligent decision support to operators, complementing operator risk assessment and decision making in multi-platform situations. Conversely, the operator’s higher-level decision-making capabilities provide an objective point of reference to refine, correct and augment the autonomous system’s model of the physical world. In future ADT applications, such systems must operate as a distributed interlinked network, supporting greater inter-platform coordination, collective and collaborative decision making, as well as decentralized and diversified control.

Area 4: Adaptive human-machine interfaces and interactions

Based on the synthesized information, cognitive systems provide intelligent decision support and task allocation to both machines and operators, supporting the dynamic sharing and allocation of authority through novel Cognitive Human-Machine Interfaces and Interactions (CHMI2). Suitable formats, functions and controls are required to support adaptive modes of interaction between the operator and the system. To achieve optimal human-machine teaming, the CHMI2 must dynamically adapt the information content (e.g., display formats and functions) supplied to the operator and the associated command/control (C2) functions. The adaptation is driven by the cognitive system’s assessment of the uncertainty/integrity associated with the synthesis process, the inferred context/scenario, and the operator’s cognitive and performance metrics.
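As a purely illustrative sketch of this kind of adaptation logic, the Python fragment below maps three inputs (an integrity score for the synthesized information, an inferred operator workload, and a context flag) to a display format and an authority allocation. Every name, scale and threshold here is a hypothetical assumption made for illustration; none of it is taken from the CHMI2 research itself, and a real system would rely on validated operator and integrity models rather than fixed thresholds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdaptationInputs:
    """Hypothetical inputs; the 0..1 scales are assumptions for illustration."""
    integrity: float      # confidence in the synthesized information (0..1)
    workload: float       # inferred operator workload (0..1)
    time_critical: bool   # inferred context/scenario flag


@dataclass(frozen=True)
class InterfaceState:
    display_format: str     # "detailed", "summary" or "alert_only"
    machine_authority: str  # "advisory", "shared" or "delegated"
    show_uncertainty: bool  # whether to surface uncertainty cues to the operator


def adapt_interface(x: AdaptationInputs,
                    min_integrity: float = 0.6,
                    high_workload: float = 0.7) -> InterfaceState:
    """Choose display content and authority allocation from the inputs.

    The threshold defaults are arbitrary placeholders, not validated values.
    """
    if not (0.0 <= x.integrity <= 1.0 and 0.0 <= x.workload <= 1.0):
        raise ValueError("integrity and workload must lie in [0, 1]")

    trusted = x.integrity >= min_integrity
    overloaded = x.workload >= high_workload

    # Reduce the information content when the operator is overloaded.
    if overloaded:
        display = "alert_only" if x.time_critical else "summary"
    else:
        display = "detailed"

    # Hand over authority only when the synthesized information is trusted.
    if not trusted:
        authority = "advisory"
    elif overloaded:
        authority = "delegated"
    else:
        authority = "shared"

    return InterfaceState(display, authority, show_uncertainty=not trusted)
```

For example, `adapt_interface(AdaptationInputs(integrity=0.9, workload=0.9, time_critical=True))` yields an alert-only display with delegated machine authority, whereas a low-integrity input keeps the machine in an advisory role and surfaces the uncertainty to the operator.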